- Maker: Holland Cuttrell

- Genre: Eversion

- Level: Graduate

- Program: Composition, Rhetoric, and Digital Media

- Course: WRIT 5400: Technical Writing

- Instructor: Dr. Eric Mason

- Semester Created: Winter 2020

Description:

Even if you’ve never heard of a plumbob, you’re probably familiar with a video game series that first hit computers nearly 20 years ago: The Sims. The Sims is a life simulation video game in which the player acts as a sort of god figure who controls the world that the player’s “Sims” find themselves in. The “plumbob” is a subtle but important feature used in the game to identify which Sim the player currently controls; the marquise-cut diamond shape (or plumbob, as it’s affectionately called by The Sims fanbase) floats above the active Sim’s head. Traditionally, the plumbob changed colors depending on a Sim’s emotional state, with green representing happiness, blue signifying sadness, and so forth. My project plumbob is a 3-d printed object that functions very similarly. Users near the plumbob can vocalize how they’re feeling, and my plumbob will light up with a color that corresponds to the emotion the user states.

Reflection:

How It Works

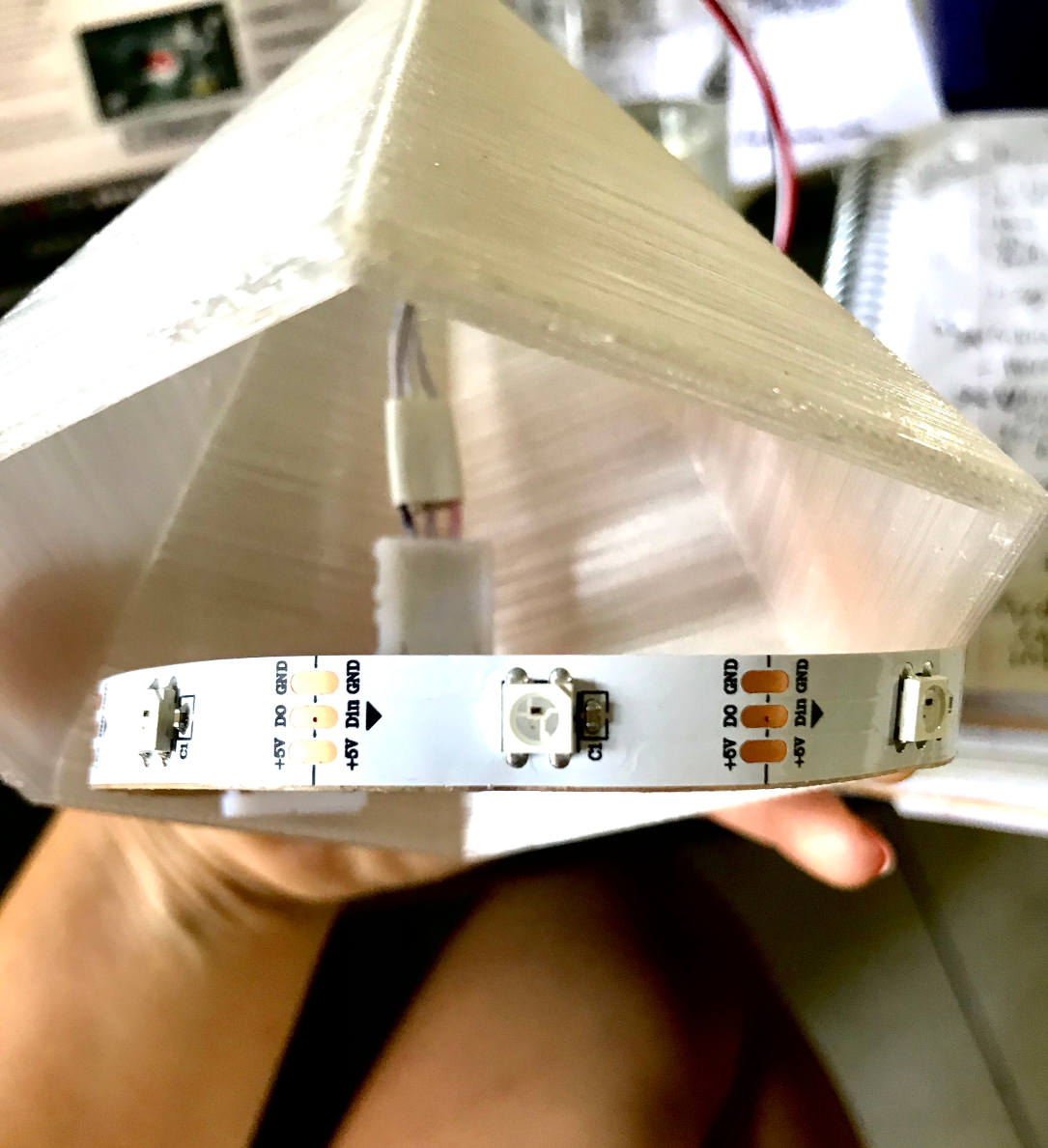

Although it is just a simple shape that lights up on command, the plumbob has some rather complex technology behind it. The model itself was created in a 3-d CAD (computer-aided design) program and then exported as an .stl file that a 3-d printer could recognize. Through a process called “additive manufacturing,” the 3-d printer then built up layers of plastic filament to create the final shape. Printing this seemingly simple design took several hours. The material used to print the plumbob was a semitransparent plastic. To make the plumbob shine, an addressable LED light strip (where each light is controlled by a small microchip) was placed inside the plumbob, with wires carrying power and data inserted through the top of the plumbob. The microchips control tiny arrays of red, green, and blue LEDs that can be programmed to combine at different brightness settings, reproducing different colors and patterns that match the vocalized words shown below:

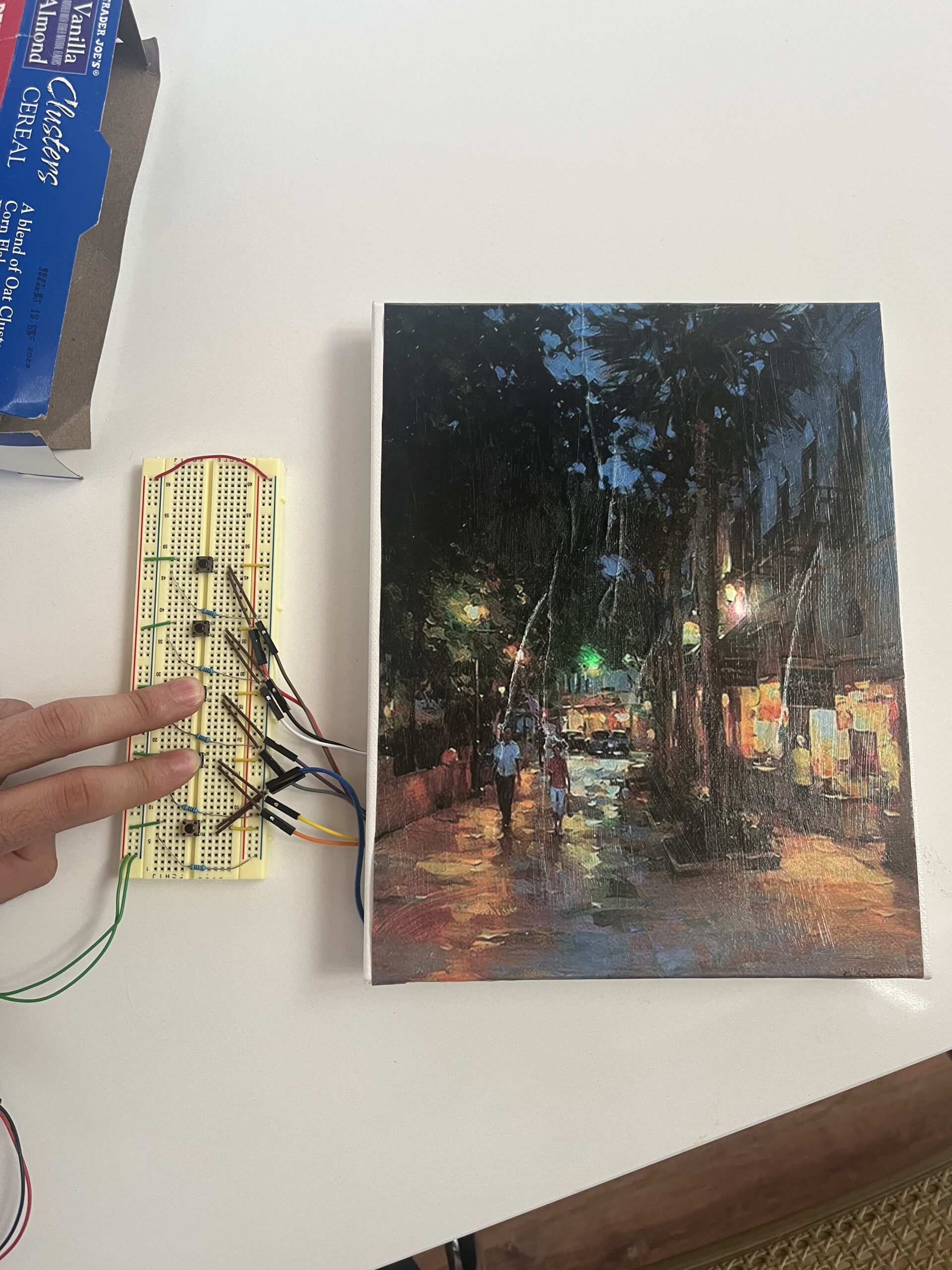
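As a rough illustration of how spoken emotions might map onto red, green, and blue brightness values, here is a minimal Python sketch. It is not the project's actual code: the names `EMOTION_COLORS`, `scale`, and `strip_frame`, the strip length, and the table entries are assumptions made for illustration. Only the green/happy and blue/sad pairings come from the description of The Sims above.

```python
# Hypothetical emotion-to-color table as (red, green, blue), each 0-255.
# Only these two pairings are mentioned in the text; others would be added here.
EMOTION_COLORS = {
    "happy": (0, 255, 0),   # green
    "sad": (0, 0, 255),     # blue
}

OFF = (0, 0, 0)


def scale(color, brightness):
    """Scale an (R, G, B) color by a brightness between 0.0 and 1.0."""
    brightness = max(0.0, min(1.0, brightness))  # clamp out-of-range values
    return tuple(int(channel * brightness) for channel in color)


def strip_frame(emotion, num_leds=8, brightness=1.0):
    """Return one color per LED for the emotion; unknown emotions turn the strip off."""
    color = scale(EMOTION_COLORS.get(emotion, OFF), brightness)
    return [color] * num_leds
```

For example, `strip_frame("happy", num_leds=3, brightness=0.5)` yields three half-brightness green values, which a strip driver could then write to each LED's microchip.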

The voice recognition technology used to make the plumbob’s light respond to one’s voice is the Google Voice Kit 2.0. The device resides in a small cardboard box and allows for a do-it-yourself artificial intelligence approach to speech recognition; essentially, this kit allows speech recorded by a microphone to be uploaded via an internet connection to Google servers, which interpret the sounds to determine what words were said. This data is then sent back to the Voice Kit, where it can be parsed by your program, written in a programming language called Python, to determine what action to take. Examples of Python programs designed to work with the Google Voice Kit are available in the Voice Kit’s online maker’s guide. The kit houses a microphone, a speaker, and two circuit boards: a Raspberry Pi (a stand-alone micro-computer) and the Google Voice HAT (Hardware Attached on Top), which attaches to the Raspberry Pi board, helps facilitate audio capture and playback, and connects to Google services housed online. By allowing the plumbob to send data to internet-based services, this kit makes this project a true IoT (Internet of Things) device.
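The parsing step described above, in which the program inspects the recognized words and decides what to do, can be sketched in plain Python. This is only an illustration under stated assumptions: the function `find_emotion` and the word list `EMOTION_WORDS` are hypothetical, and the sketch takes the recognized text as an ordinary string rather than calling the Voice Kit's own library.

```python
import re

# Hypothetical vocabulary; the project's real word list appears in its image.
EMOTION_WORDS = ("happy", "sad")


def find_emotion(transcript):
    """Return the first known emotion word in the recognized text, or None."""
    if not transcript:
        return None  # nothing was recognized
    # Lowercase the text and split it into whole words before matching.
    words = re.findall(r"[a-z']+", transcript.lower())
    for word in words:
        if word in EMOTION_WORDS:
            return word
    return None
```

Matching on whole words means a transcript such as "I am feeling happy today" returns `"happy"`, while a word that merely contains a listed emotion is ignored.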

Why It Matters

We may not think that video games have very much to do with writing. Part of the significance of this project is in bringing a seemingly untouchable, digital world like The Sims to fruition by creating a physical interface. Modern technology consistently works to make its users comfortable enough to forget that the interfaces they’re interacting with are actually present, and I want my project to enable my users to openly interact and have my technology provide feedback in an immersive environment. Creating immersion is another step in creating a more fluid version of both the real and virtual worlds.

This project also matters because it allowed me to engage in a process where literacies were developed throughout production. After examining the demo code to understand how it responded to voice commands like “turn on the light” and “blink,” I was able to trace how it communicated with various physical and online technologies. In order to program my own phrases, I had to create an “expect phrase” the voice kit could listen for and accurately identify before a command could be issued. Next, I added a “handle phrase,” code that performed the requested action. I ran into some issues trying to make the LED lights do what I needed directly from the Google Voice Kit 2.0, so I employed the help of a serial cable connection and an Arduino Uno (a technology we had used earlier in the class). The Arduino Uno is an open source microcontroller board that you can load code onto; here it acts as a command module, turning the LED lights on and off or changing their color when an input is received. The serial connection acted as a way to connect two computers (in this case, linking the Arduino with the Google Voice Kit), sending just one bit of information at a time to trigger light changes. Keeping the amount of information transferred low also allowed me to keep the Arduino code (written in a language called C) much simpler than if the Arduino had to identify commands sent as longer strings of characters. Projects like this matter because they challenge us to develop these new literacies and troubleshooting techniques that lead to applied learning connected to our rhetorical purposes.
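The pairing of an “expect phrase” with a “handle phrase,” and the hand-off of a very short message over the serial connection, might look something like the Python sketch below. It is not the project's code or the Voice Kit's API: `PhraseDispatcher`, `make_sender`, and the command bytes `b"g"` and `b"b"` are hypothetical, and an in-memory `io.BytesIO` buffer stands in for the serial port. The sketch also assumes each command is a single character, which is one reading of the small messages described above.

```python
import io


class PhraseDispatcher:
    """Pairs each expected phrase with a handler (hypothetical helper)."""

    def __init__(self):
        self._handlers = {}

    def expect_phrase(self, phrase, handler):
        """Register a phrase to listen for and the action to run when it is heard."""
        self._handlers[phrase.lower()] = handler

    def handle(self, text):
        """Run the handler for the first expected phrase found in the text."""
        lowered = (text or "").lower()
        for phrase, handler in self._handlers.items():
            if phrase in lowered:
                handler()
                return True
        return False  # no expected phrase was heard


def make_sender(port, command):
    """Return a handler that writes one command byte to a serial-like object."""
    def send():
        port.write(command)
    return send


port = io.BytesIO()  # stand-in for a real serial port (assumption)
dispatcher = PhraseDispatcher()
dispatcher.expect_phrase("happy", make_sender(port, b"g"))  # assumed: "g" = green
dispatcher.expect_phrase("sad", make_sender(port, b"b"))    # assumed: "b" = blue

dispatcher.handle("I feel happy")  # writes b"g" to the stand-in port
```

On real hardware, the stand-in buffer would be replaced by an open serial port object, for instance one from the pyserial package, whose `write` method also accepts bytes. Sending a single character means the receiving Arduino code only needs to compare one incoming byte against a few known values.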